The separation of a song into its music and vocals has long been a source of excitement for composers and DJs. It has also fascinated ordinary people who are used to spending a Saturday night in a karaoke bar.

The first ways to separate music sources appeared in sound editors about twenty years ago. Over the years, they have come a long way, and the results have always depended directly on computing power. Progress accelerated significantly with the advent of CUDA, which brought general-purpose computing to consumer GPUs. This was the starting point for the emergence of modern neural networks, which are now available on every device.

1 Major milestones

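Just think - I wrote my first guide to removing vocals using CoolEdit almost twenty years ago. The idea was simple and naive - vocals are usually in the "center" of a stereo signal, so they are nearly identical in both channels. If you invert one channel and mix it with the other, everything in the center cancels out and you are left with the music panned to the sides. The result was so-so, since bass and kick drum usually sit in the center too and disappear along with the voice, but at the time it seemed like magic.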
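The "center" trick is easy to reproduce. Below is a minimal sketch in Python with NumPy, not the CoolEdit procedure itself: the function name `remove_center` and the synthetic test tones are my own assumptions, chosen only to show that a signal present equally in both channels cancels out.

```python
import numpy as np

def remove_center(stereo: np.ndarray) -> np.ndarray:
    """Cancel everything that is identical in both channels.

    `stereo` is a float array of shape (n_samples, 2).
    Returns a mono signal: half the difference of left and right.
    """
    left, right = stereo[:, 0], stereo[:, 1]
    # Inverting one channel and mixing it with the other is a subtraction.
    return 0.5 * (left - right)

# Synthetic demo (hypothetical signals, not a real song):
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
vocal = np.sin(2 * np.pi * 440 * t)    # "voice", identical in both channels
guitar = np.sin(2 * np.pi * 220 * t)   # "instrument", panned hard left

stereo = np.stack([vocal + guitar, vocal], axis=1)
karaoke = remove_center(stereo)        # only the guitar survives, at half level
```

Note that the output is mono, and that anything else mixed to the center is removed together with the voice.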

The next step was commercial effects based on spectral subtraction. Since the range of the human voice is known, a "fingerprint" of the voice can be created and subtracted from the mix in the frequency domain using the FFT. The final result was much better, but still had a lot of artifacts. It was good for fun, but not for production. Around the same time, the idea of splitting a musical composition into harmonic and percussive components (HPSS) was also developing.

With the advent of neural networks, there was a revolution in voice removal. Technically, the foundation remained the same: the signal was still processed as a spectrogram obtained with the Fourier transform, but now a machine learning model decided what to remove. The first model that really impressed me was Deezer's Spleeter. This neural network had been trained on tens of thousands of songs, yet it was still not perfect. There were artifacts in the separated tracks, and vocals sometimes took on unnatural tones. But it was a huge step forward, and anyone could try the model. Several DAW plugins were built on Spleeter, but its glory faded against the backdrop of the new star from Facebook. It was Demucs.

2 Evolution

The advent of hybrid full-band models raised the quality of separation to unprecedented heights: drums and bass were extracted best, while vocals were still difficult to isolate. Unfortunately, the models evolved extensively, growing in size rather than in efficiency. The size of the trained models grew by leaps and bounds. BS Roformer and MDX models take up hundreds of megabytes, making them suitable for web services but not for desktop use. Why don't I like web services? Because you have to pay for quality, and you have to pay all the time.

Enthusiasts have nevertheless prepared a desktop version with the self-explanatory name Ultimate Vocal Remover, but you will have to accept the loss of several gigabytes of disk space. Factor in the indirect cost of the download traffic as well.

3 Revolution

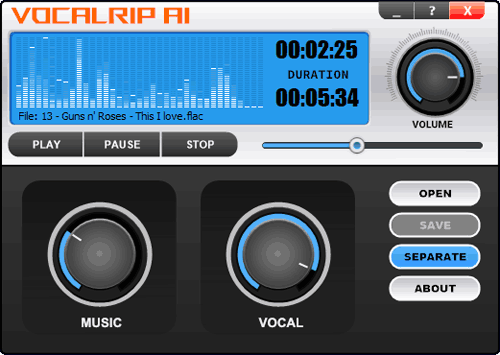

It was time to move from quantity to quality, and VocalRip was born. This model focuses on vocal extraction and has been reduced in size by more than an order of magnitude by relying on more complex calculations. It is 50 times smaller than UVR5! This allowed the developers to package it into a very modest desktop application that lets you change the volume of individual parts of the composition independently. The advantage is that you pay only once for a lifetime license and are not tied to a network connection. If you just need a karaoke player and do not need to save the separated tracks, it is completely free.

4 Final Thoughts

I'm sure this is not the end of the story. With the advent of new GPUs, processing speeds will keep increasing, making it possible to create even more complex models. However, today's state-of-the-art models have already reached a level where they can be used in music production. More importantly, they are available to all of us.